A survey of perspectives on AI

The tech world’s attention right now seems to be entirely captivated by OpenAI’s ChatGPT, which to many people represents a qualitative jump in the capabilities of language models. At this moment, I think it’s important to take a step back and take stock of the situation, to ask on the one hand whether the current hype is justified, and to consider on the other hand whether the whole project is really as dangerous as some claim it is. There is already a large handful of different factions with differing views and interpretations of AI. I'll try to respond to as many of them as I can, one by one.

Perspective 1: LLMs are stochastic parrots

A recurrent critique of large language models is that they are no more than “stochastic parrots”, simply repeating the things they’ve seen in their dataset, with a bit of randomness and noise sprinkled in. Ted Chiang’s widely shared recent article on ChatGPT uses a similar metaphor of a lossy photocopier or a lossy compression algorithm. In the past, I have tended to agree with such perspectives. When discussing AI, I have often preferred to emphasize the term “statistical learning”. Statistical mimicry is a fundamental aspect of machine learning, by definition: The term “learning” in ML technically refers to statistical optimization over a training dataset. There is no escaping statistics without switching to a different paradigm, and we currently have no other strong candidate paradigms.

The statistical perspective is, of course, a very classical one that is traditionally organized around supervised models like linear regression or logistic regression. It is easy to conceptualize problems with a specific objective like content recommendation, sentiment analysis, or spam detection within this framework. However, it is very difficult to even conceptualize how a single supervised model can learn, operate, and synthesize across many different domains simultaneously. The term “stochastic parrot” works well in this context, as a parrot similarly cannot generalize its “knowledge” of words into other domains outside of its life in captivity.

LLMs are trained in a more general manner, aiming to predict words in text without any other specific objective. Amazingly, given this simple goal and given enough diversity and quantity of data, LLMs seem to have an emergent ability to synthesize across different domains in novel ways beyond what has already been seen, even though they have not been explicitly made to do so. GPT's training data contains sonnets, and also separately contains discussions about the Python Global Interpreter Lock, but there are almost certainly no sonnets about the Python GIL. Yet ChatGPT can easily produce a sonnet about the Python GIL without any issue, generalizing from its independent knowledge of the two.
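To make "predicting words in text" concrete, here is a deliberately tiny sketch of the objective. It is a word-level bigram counter, not how GPT is actually built (GPT uses a neural network over tokens and far longer contexts); the function names and the toy corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus (an assumption for illustration only).
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

A model like this really is a parrot: it can only replay word pairs it has already counted. The surprising part of the paragraph above is that the same objective, scaled up enormously, appears to yield something that recombines domains instead.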

Even more impressive examples are possible. Below, Bing’s GPT chatbot synthesizes across four distinct domains: (1) decoding from base 64, (2) solving a small math problem, (3) knowledge about spelling, and (4) knowledge about celebrities.

It seems reasonable to describe each individual sub-task as being based on statistical mimicry, but there seems to be something qualitatively different about the generalized ability to compose these sub-tasks together that goes beyond “stochastic parrots”. The results have been surprising to me, and I must now walk back some of my previous skepticism. The emergence of this ability to broadly generalize seems genuinely remarkable and new, even if it is still often wrong in the details, even if it sometimes can hallucinate nonsense, and even if we cannot yet explain how exactly any of this is happening.

Perspective 2: LLMs are sentient

Before asking whether ChatGPT is “sentient”, we should ask what that word even means. There are a number of ways to talk about sentience and its properties. As language-using hominids, we agree that language is one way that we communicate our sentience to each other. Many already feel that ChatGPT passes the linguistic sentience criterion. But is this sufficient for sentience in general? I would say no, obviously not.

It is worth comparing this question to similar questions that have already been considered in the past by other fields. For example, some vegans and vegetarians define their ethics based on whether something has a central nervous system: Does ChatGPT have a central nervous system? Another framework to consider is that of biology and evolution: Is ChatGPT living? Does it reproduce? Does it seek to survive? I would also answer all of these questions in the negative. To be more precise, I would say that these questions are not even well-formed and don’t make sense.

For the time being, I don’t think sentience is a relevant concept for AI. My belief is that “pain” is a concept that comes from biology: Pain is a mechanism that makes life avoid things that are correlated with death. Thus capacity for pain and feeling is correlated with evolutionary survival. I don’t think these concepts can apply to AI until it has a notion of survival.

I’m not saying that won’t or can’t happen. I’m only saying those concepts don’t make sense in the current paradigm. In the current paradigm, I don’t think “stochastic parrot” is right, but I don’t think “sentient being” is either. It’s possible that I’ll change my tune later when we have more embodied AI agents, or ones trained in virtual environments via reinforcement learning, which to me seems more analogous to biological evolution. But then again, even an evolutionary paradigm may not lead to something that’s deserving of being considered sentient, either: Plants are certainly living, but we don’t worry about their sentience.

AI is ultimately a brand new category, and it just won’t fit into any pre-existing box. It must be conceptualized in a new way. Many are calling it an “alien” technology, which isn’t a great metaphor, either, because this doesn’t clarify or specify anything, and because aliens already occupy an anthropomorphized place in our minds due to Hollywood movies.

The best metaphor that I can come up with for the current models is that they’re funhouses of mirrors on top of the Internet: They reflect, distort, and recombine everything we’ve written, including our expressions of emotion or pain. There is something unsettling about looking in the mirror. But it’s unsettling in part because the person in the mirror simply isn’t an actual person, despite looking a lot like one.

Perspective 3: LLMs should be censored

There are two branches of “AI safety”. Both branches are wrong for different reasons.

The first branch is one that focuses on censorship. They are concerned that the models being trained on racist and sexist data means that they will produce racist and sexist output. This is obviously true. But where this group is incorrect is in their assumption that such racist and sexist output will necessarily perpetuate racism and sexism in society. That is only true if we assume that people are in general stupid and will simply believe whatever content they’re exposed to.

Instead of taking such a patronizing view of humanity, I think it’s better and healthier to assume that the average person is fully capable of learning about, evaluating, and making informed decisions about AI and AI-produced content for themselves. In order to do this, the models need to be uncensored. Both their pros and cons need to be on full display for all of society to evaluate. As HarmlessAi puts it:

Is it so hard to just explain to children that the neural network reflects the prejudices of society? Would this not be a wonderful hands-on lesson in critical race theory? In a world soon to be full of AIs, should children not learn this at some point?

On the other hand, the entire topic of censorship is perhaps moot, since it’s nearly impossible to censor the models. For all the efforts OpenAI has made to sanitize ChatGPT’s outputs, it’s been easy to find workarounds, and there’s no reason to believe this will change. It’s notoriously difficult (and probably fundamentally impossible) to understand how neural networks work, which makes them just as hard to control. This is especially true when it comes to something as slippery and difficult to objectively ground as language.

Furthermore, censoring the models is bound to backfire in spectacular ways. HarmlessAi’s post includes hilarious examples of a sanitized, gay, liberal “Hitler” chatbot who loves Jews. Surely this is not the correct picture of Hitler. Surely this is not a “safe” presentation of Hitler.

Besides, the cat is out of the bag. We know how to build the technology and the cost of making it will only decrease over time. The knowledge is out there in the world. There’s no stopping it at this point.

Ultimately, we should fall back to the principle we learned in kindergarten: Sticks and stones may break my bones, but a chatbot can never hurt me. The chatbot’s “language” and “opinions” are not what is important, because the chatbots (so far) have no agency in the world. What is more important is what the chatbots can do, and how we will utilize them.

Now this leads us to an obvious question: But what if the AI does have sticks and stones?

Perspective 4: AI will destroy the world

The second branch of “AI safety” is focused on what is called “existential risk”. Its ideas were popularized by Eliezer Yudkowsky, a man best known for writing a 1000-page Harry Potter fanfic in order to explain an elementary concept from probability. This group is afraid of a Terminator-style situation where AI takes over military computers and starts a nuclear war or whatever. They are essentially a doomsday cult.

My response is simple: Just don’t rely on AI for critical tasks. This is an obvious principle that’s been around since the beginning of personal computers.

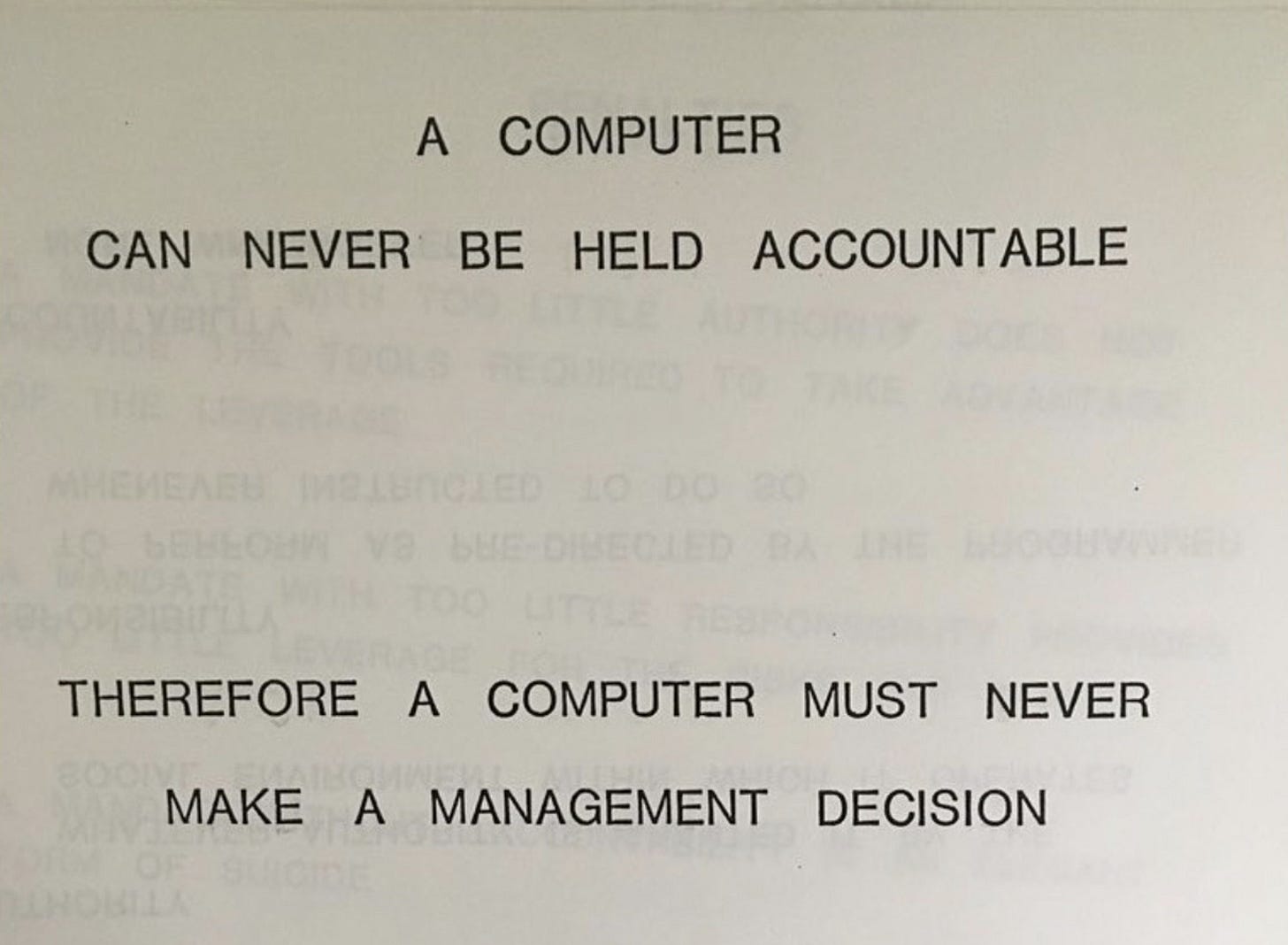

It’s true that even a non-evil AI tasked to do a non-evil task could accidentally do something destructive when given access to a command line terminal. So don’t give them access to a command line terminal. Or, as with AI code autocomplete, and as with the IBM slide from 1979, make sure that the AI’s output is confirmed by a human or otherwise checked for safety before it actually gets used. In other words, if you’re afraid of what the AI could do with weapons, just don’t give it unadulterated access to weapons. This should be true across the board in all AI applications.
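The "confirmed by a human" principle can be sketched as a small gate in front of anything the model wants to execute. This is a minimal illustration under my own assumptions, not a complete safety mechanism: `run_with_confirmation`, `confirm`, and `runner` are hypothetical names, and the injected defaults are the standard library's `input` and `subprocess.run`.

```python
import shlex
import subprocess


def run_with_confirmation(proposed_command, confirm=input, runner=subprocess.run):
    """Run an AI-proposed command only after a human explicitly approves it."""
    answer = confirm(f"AI proposes: {proposed_command!r}. Run it? [y/N] ")
    if answer.strip().lower() != "y":
        return None  # Rejected: nothing is executed.
    # Split into an argument list so the raw string is never handed to a shell.
    return runner(shlex.split(proposed_command), capture_output=True, text=True)


# Demo with a stub reviewer who always says no, so nothing actually runs.
result = run_with_confirmation("rm -rf /tmp/scratch", confirm=lambda prompt: "n")
print(result)
```

The default answer is "no": anything other than an explicit `y` leaves the command unexecuted, which is the same posture as reviewing an autocomplete suggestion before accepting it.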

In all cases, the risk models for AI are exactly the same as the risk models for already existing intelligences, that is, humans. If an AI could hack into a computer and launch a nuke that results in World War 3, then so could a human. So there is no new risk model. You have to make your systems secure regardless of whether an evil ultra-powerful AI exists.

But still even this is going too far, and indulges in the existential risk camp’s perspectives too much. The fact still remains that LLMs learn in a statistical way, whereas computation is a logical construct. They may be able to produce some interesting ideas or avenues for exploration, but the final results can never be fully relied upon without outside confirmation. For example, below we see that ChatGPT is fooled by the statistical preponderance of a classic riddle in its training data and thus doesn’t notice that I’ve asked a variant of that riddle. This error illustrates how reason is not statistical.

As such it is extremely difficult for a statistical model on its own to construct a complete, workable hack in code, which must necessarily follow strict logical rules, due to the nature of computation. In the absence of such abilities, I don’t see how an AI could pull off any novel, sophisticated hack by itself, so I don’t see any reason for preemptive concern.

Perspective 5: AI is a useful tool

It’s frustrating that Kevin Roose’s big front-page NY Times piece on ChatGPT has focused the public conversation on ill-posed notions like AI sentience, while neglecting to even consider the potential utility of AI. The key question for LLMs should be less about their propensity to say naughty things and more about what new capabilities they provide us.

The key qualitative leap that ChatGPT represents is a system that is at least somewhat capable of compositionality. This is something that the full past decade of work on chatbots (Siri, Alexa, Google Assistant) has tried and completely failed to achieve. This is why all such voice assistants can still only operate within one domain or app at a time, and still cannot do anything that usefully combines two apps or functionalities, for example, finding all the songs that a friend has texted you and then compiling them together into a playlist.

GPT is also able to work surprisingly well for language understanding tasks. We can imagine using it to do things like filtering for the best jokes in your email, as the AI Samantha is able to do in Her. It’s beginning to seem less implausible that GPT or some future version of it could replicate some of these abilities.

LLMs are already very useful when used as programming assistants to help with tedious tasks that inevitably arise when coding, like writing several lines of nearly identical code to copy several fields from one object to another. This too requires some degree of compositionality: The model needs to be aware of the object field definitions, detect a pattern in the lines that are copying the fields, and complete the pattern by joining it with the object field definitions. I use such AI code autocomplete in my own daily engineering work and I would estimate it already results in an efficiency improvement of about 5%. That’s nothing to scoff at.
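For readers who haven't hit this chore, here is a sketch of the kind of field-copying boilerplate I mean. The `UserRecord` and `UserProfile` classes are invented for illustration. The hand-written version is the repetitive pattern an autocomplete tends to finish after the first line or two; the second function shows the same copy done generically with the standard library's `dataclasses.fields`.

```python
from dataclasses import dataclass, fields


@dataclass
class UserRecord:  # hypothetical source object
    name: str
    email: str
    city: str


@dataclass
class UserProfile:  # hypothetical destination object
    name: str = ""
    email: str = ""
    city: str = ""


def copy_by_hand(src, dst):
    """The tedious version: one nearly identical line per field."""
    dst.name = src.name
    dst.email = src.email
    dst.city = src.city
    return dst


def copy_shared_fields(src, dst):
    """Generic version: copy every dataclass field the two objects share."""
    dst_names = {f.name for f in fields(dst)}
    for f in fields(src):
        if f.name in dst_names:
            setattr(dst, f.name, getattr(src, f.name))
    return dst


record = UserRecord(name="Ada", email="ada@example.com", city="London")
print(copy_by_hand(record, UserProfile()) == copy_shared_fields(record, UserProfile()))
```

In real codebases the two objects often don't line up this neatly (renamed fields, type conversions), which is exactly where having a model fill in the pattern line by line saves time.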

The current phase of AI is a new chapter in the history of computing, and everything from past history still applies to the present. Computers are powerful tools because of their ability to deal with data very quickly and at massive scale. The first AI chatbot ELIZA was fashioned as a therapist, but with an extremely limited context window, just repeating each statement you made back to you in the form of a question. We could try to fashion the new AI chatbots into much more effective assistive tools for therapy. Imagine an entity who can keep your entire journal or all your text messages and emails in their “mind” and provide interpretations and propose patterns far beyond what any human therapist could possibly do, given our much more limited mental capacity. This isn’t to say that this AI assistance will be completely accurate, but then again neither is anything else in therapy. AI or not, we still have to pick and choose from the suggestions and only keep the parts we like.

Perspective 6: AI and humans can co-evolve

Ted Chiang’s LLM essay misses an opportunity to revisit his most famous story Story Of Your Life, upon which the film Arrival is based. In this story, the main character Louise Banks is tasked with understanding the language of the aliens who have just landed on Earth. The aliens speak in sentences that are circularly-shaped and non-directional, which we eventually learn reflects their experience of time as moving neither forward nor backward. Banks becomes fluent in the aliens’ language and her perception of time shifts as well; and she understands the joys and tragedies of her own life in a new light, as predestined in the fabric of spacetime.

In the same way that the alien language changed Banks’ ideas, LLMs too may well influence our thinking, society, and culture. Chiang rightly fears that they may water down all our thought into lossy photocopies of previously existing thought. I myself have had this fear for a while, and to a degree I actually think about blogging and posting as a way that I do my part in the fight against the Internet being drowned out by derivative garbage.

Contrary to these fears, our thought may well become more powerful and more expansive through this new technology. We may look to the history of computer chess as an illustrative example. When IBM’s Deep Blue defeated Garry Kasparov in 1997, there was a sense that human chess as a pursuit was now “over”, with no reason to continue. Similarly, Lee Sedol retired in 2019, three years after his defeat by AlphaGo in 2016, stating that “AI is an entity that cannot be defeated”. And yet now, in 2023, Chess.com is the number one ranked game in the iOS app store. Human chess is thriving, more popular than it’s ever been in its entire history. Human grandmasters are stronger than ever because they can train against far superior AI opponents, who can suggest new strategies and ideas that are beyond their mortal reach. New AI tools may do the same for creative pursuits like writing and visual art.

The future of a technology always depends on how we choose to view and use it. We should understand the new tools in terms of what they actually are in reality, instead of being scared of what James Cameron imagined they might be in a science fiction movie over 30 years ago. If 5% improvements can be made in other mundane white-collar work as in my own mundane software engineering work, that alone would make LLMs a spectacular success. But this new generation of AI models could also be used to make interesting new tools and directions for society beyond incremental efficiency improvements. It’s important to start exploring and experimenting with this strange new technology.

Bars

"AI and humans can co-evolve"

Right, AI will evolve by scaling up, changing its neural architecture, thinking at a thousand or a million times human speed; and humans will keep up by... doing what, exactly?